Can your computer vision model detect subtle motion shifts, recognize overlapping actions, or track fast-moving objects across frames?

Generic video labeling falls short of what advanced AI training demands. In domains like autonomous vehicles, surveillance, or smart retail, where visual accuracy is mission-critical, AI faces challenges like object tracking in crowded or cluttered scenes, recognizing activities and gestures at varying motion speeds, or understanding complex, multi-actor interactions. To compete, these models must be trained to capture not just “what” is visible but also “when” and “how” it shifts.

At DataEntryIndia.in, we specialize in labeling complex visual datasets at scale—combining subject matter expertise, intelligent automation, and a human-guided QA process. Our video annotation services empower advanced computer vision models for accurate object tracking, activity recognition, gesture detection, and scene understanding.

What sets us apart? The ability to efficiently handle edge cases and maintain annotation consistency across massive video datasets. By leveraging techniques like multi-frame interpolation, keyframe labeling, and automated tracking across frames, we maintain efficiency in large-scale video annotation projects without compromising quality.

Partner with a reliable video annotation company to ensure accuracy the first time.

Our video annotation outsourcing services cover a wide range of labeling techniques, selected to align with your model’s learning objectives and domain complexity. As a specialized video annotation company, we deliver structured video training datasets designed around your AI use cases, not generic labels.

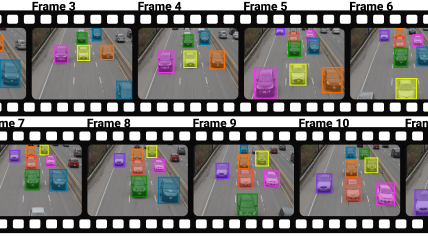

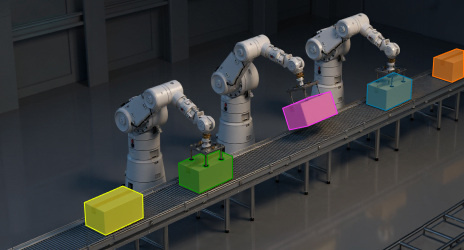

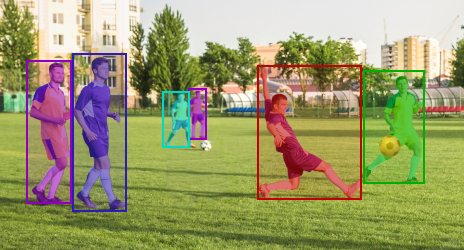

Our annotators specialize in drawing precise 2D and 3D bounding boxes around objects of interest, enabling AI systems to accurately detect, localize, and classify objects within video frames. Each box is frame-synchronized, occlusion-aware, and consistently aligned to preserve object identity over time. By providing high-fidelity spatial data, we enhance model robustness in complex environments, improving object recognition accuracy and contextual understanding for retail automation, AV perception, insurance damage assessment, and real-time vehicle navigation.

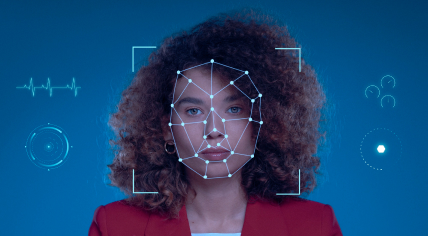

Our video data annotation experts label calibrated key points such as facial features, body joints, and fingertips across video sequences to help AI systems recognize structure, movement, and spatial relationships. Each annotation follows biomechanical or geometric constraints to train models in pose estimation, facial recognition, gesture tracking, and behavior analysis. Our team ensures anatomical consistency in video keypoint annotation, even under partial visibility, extreme motion, or camera shift.

We tag video components across frames into predefined classes (person, road, signage, vegetation, etc.) for detailed scene understanding, lane navigation, and environmental mapping in automated systems. Our annotators utilize edge-snapping tools, auto-fill techniques, and manual adjustments to segment dense scenes with overlapping objects, low-light conditions, and motion blur. Through temporal coherence validation, we ensure consistency in video semantic segmentation across transitions, even in high-motion or multi-camera footage.

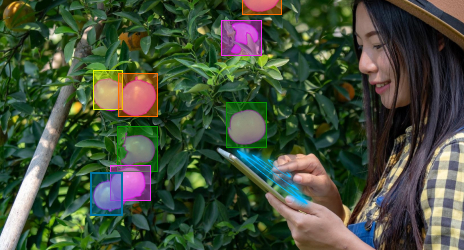

We trace object boundaries using tightly connected vertices to define irregular shapes with pixel-level accuracy, regardless of their complexity, overlap, or partial obscuration. Our video data labeling experts adjust vertex density based on curvature and motion variance, ensuring spatial precision without overfitting. This method surpasses bounding boxes in accuracy, enabling detailed object recognition for applications like drone navigation, retail analytics, and environmental monitoring.

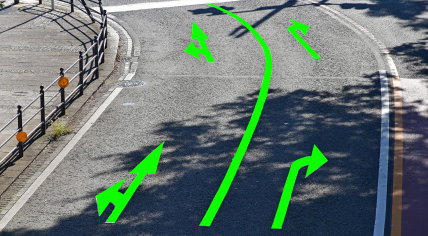

We label thin, elongated structures (such as roads, cables, pipelines, and lane markings) within video frames using directional polylines. Our polyline annotations include directional vectors, width parameters, and connectivity metadata that enable autonomous systems to understand navigable spaces and make informed routing decisions. When annotating video footage, our specialists maintain spatial alignment, consistent labeling across sequences, and curvature accuracy to ensure reliable frame-to-frame traceability.

We specialize in time-series annotation—capturing actions, transitions, and event sequences across continuous video streams. Each labeled segment is tagged with its start and end frames, total duration, and sequence order to preserve temporal context and avoid drift over. Through detailed and precise sequential sensor data annotation, we train AI models for use cases where understanding how events unfold over time is essential, such as surveillance incident tagging, sports performance analysis, or manufacturing line monitoring.

We assign predefined tags to each frame or batch of frames based on scene content, lighting condition, anomaly presence, or event type. This helps classify scenarios (such as day/night, normal/suspicious, and occupied/vacant), enabling faster dataset curation and real-time model filtering. Through video segment-level annotation, we support rapid indexing, event detection, and content filtering, enabling efficient retrieval and analysis in large-scale video datasets.

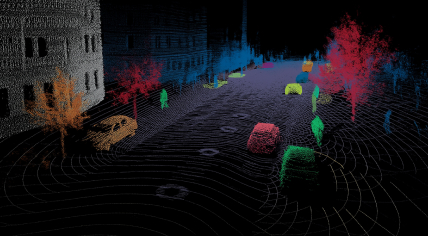

We annotate LiDAR or depth-sensor data by adding attributes like orientation, velocity, and classification. Leveraging bounding boxes, semantic labels, and instance segmentation, we maintain geometric accuracy across multiple sensor viewpoints. This comprehensive 3D point cloud annotation enables AI-powered autonomous vehicles, robotics systems, and spatial analysis applications to accurately understand complex environments.

We outsourced a high-volume video labeling project and were impressed by the consistency and accuracy across thousands of annotated frames. The team adapted quickly to our workflow and delivered training data before the desired timeline for quick deployment.

Handling live video annotation at scale isn’t easy, but DataEntryIndia.in managed it with incredible precision and low latency. The annotated video datasets gave our real-time surveillance AI the edge it needed to flag events accurately as they occurred.

Their expertise in video annotation for robotics played a key role in improving our AGV’s navigation system. From labeling lane markers to dynamic obstacles, they delivered context-rich training datasets. Our path planning models are now more reliable in real-world environments.

We follow an established process to accurately annotate video data. The stages involve:

From popular third-party or open-source tools to proprietary software, our video annotation teams are equipped to operate within your preferred system. We ensure seamless tool adoption while maintaining annotation accuracy and complete data security.

Explore real-world success stories where our data annotation expertise enabled clients to overcome complex labeling challenges and scale their AI initiatives.

We’ve earned the trust of global AI teams by delivering video labeling services tailored for real-world complexity. Our scalable workflows, domain expertise, and multi-stage QA reduce model failure risks and accelerate time to market.

We operate within an ISO 27001-certified infrastructure, enforce strict NDAs, and follow role-based access control for sensitive video data. All workflows are aligned with global compliance standards, including HIPAA, GDPR, and industry-specific data handling protocols.

Our annotation teams are trained on domain-specific taxonomies and visual nuances—be it robotic navigation, medical imaging, or retail surveillance. They apply task-specific logic to eliminate common labeling errors and consistently deliver training datasets aligned with your use case.

Our video annotators are specialized in handling complex scenarios such as overlapping objects, motion blur, or partial visibility. These edge cases are flagged, verified manually, and documented to maintain accuracy and consistency across similar events in large video datasets.

While we leverage automated tools for video labeling, we don’t solely rely on them. Our subject matter experts are involved at every stage, from defining class hierarchies to validating each annotation for contextual accuracy and consistency.

Whether it’s a short-term annotation sprint or an ongoing, high-volume video labeling project, we offer flexible pricing models that align with your project scope, delivery timelines, and evolving data needs.

Get detailed reports (on your preferred frequency) with insights on accuracy score, rejection reasons, and annotation velocity. A dedicated project manager serves as your primary point of contact, facilitating rapid query resolution and regular project updates.

Yes. We offer correction, refinement, and augmentation of pre-labeled datasets. This includes fixing label errors, adding missing annotations, or layering additional metadata like timestamps, object states, or interactions, based on your project needs.

Yes. We can add metadata such as object speed, behavior tags, state changes, or custom class attributes on a per-frame basis—critical for training AI models in behavioral recognition, robotics, or time-sensitive tasks.

Yes, alongside our core video tagging services, we offer transcription support for videos that require speech-to-text conversion, dialogue annotation, or audio event tagging.

We work with a wide range of industry-standard video formats, including MP4, AVI, MOV, and WEBM. We support all common resolutions—from SD to 4K—based on your project’s input quality.

Need More Clarity before Outsourcing Video Annotation?